I tried gaming on a cheap dual-Xeon system – here’s how it went

Key takeaways

- A budget Xeon build can work for gaming at high resolutions, since the GPU matters more than the processor there.

- Server CPUs can hinder performance in esports titles, as these games prioritize single-core performance and high refresh rates.

- Although not suitable for high-refresh-rate gaming, Xeon systems can deliver playable frame rates at high resolutions when paired with powerful GPUs.

There’s no denying that server-grade hardware offers top-notch performance when it comes to heavy-duty virtualization tasks. However, gaming is one area where most Xeon and Epyc CPUs tend to falter. To put it bluntly, even the most expensive server processor can easily lose to a standard budget processor when it comes to gaming.

But with older Xeon chips available at low prices, it’s tempting to pick one up. Having recently purchased a dual-Xeon system for my home lab, I decided to test it out with some of the most popular games in my library. If you’re curious, here’s everything I noticed.

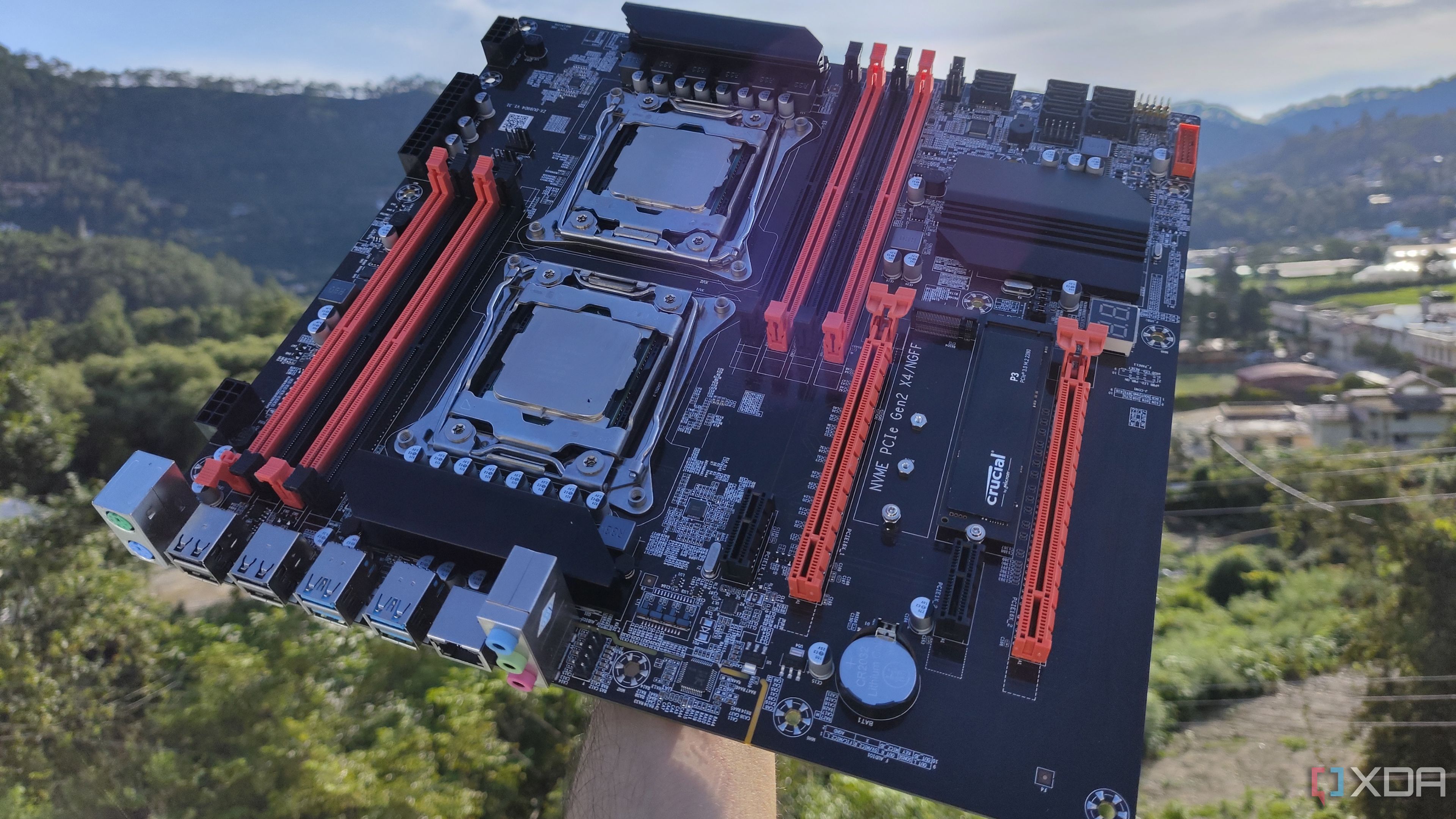

Test bench

Before I post the images and benchmark tables, I would like to go over my test bench specs. Since we’re testing budget processors, I ran all my tests on an X99 motherboard with two Intel Xeon E5-2650 v4 processors, each running at stock settings. RAM-wise, I used two 32GB ECC-registered DDR4 sticks clocked at 2400MHz in dual-channel mode, although in hindsight, I should have gone for a quad-channel configuration for the best performance. If you live in the US, you should be able to find a similar system for less than $250.

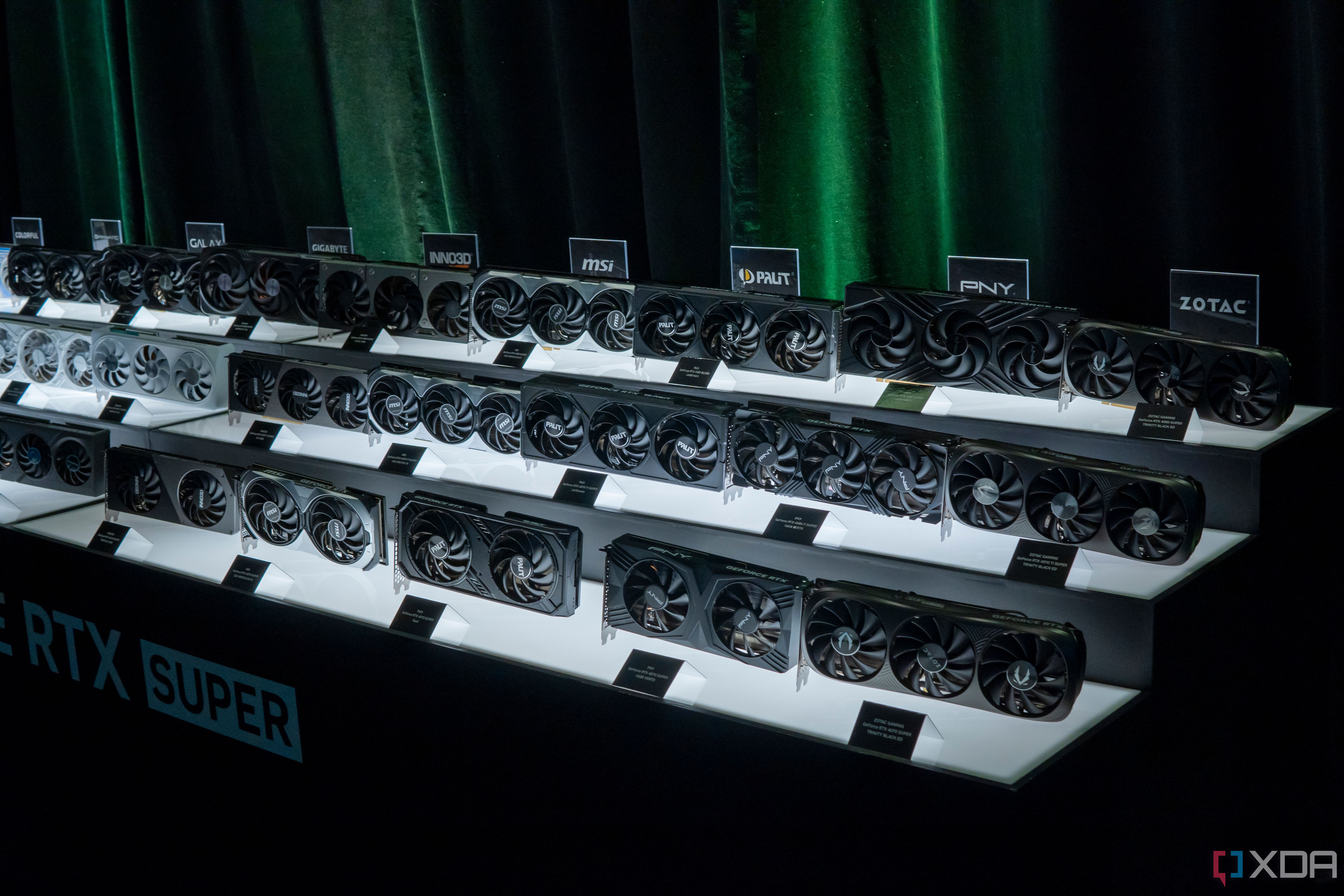

With that out of the way, it’s time to address the elephant in the room: the GPU. For the first round of tests, I used my old GTX 1080, which still delivers solid frame rates at 1080p and can hold its own at 1440p. Since the project would be incomplete if I only used an eight-year-old GPU, I switched to my RTX 3080 Ti for the second round. It’s not an RTX 4090 by any means, but I believe it serves as a good stand-in for a recent-generation graphics card. I was also tempted to give my Intel Arc A750 a shot, but decided against it since my X99 motherboard doesn’t support Resizable BAR, and without this feature enabled, the Alchemist family is crippled, especially on the performance front. As for the power supply, I used my 1000W Corsair RM1000e so the test bench would have plenty of power to spare.

As for the games, I decided to try five graphically demanding titles from my library: Armored Core VI: Fires of Rubicon, Baldur’s Gate 3, Cyberpunk 2077, Elden Ring, and Red Dead Redemption 2. I used the highest settings in almost every game, and tested all titles twice on each GPU: once at 1080p and again at 4K.

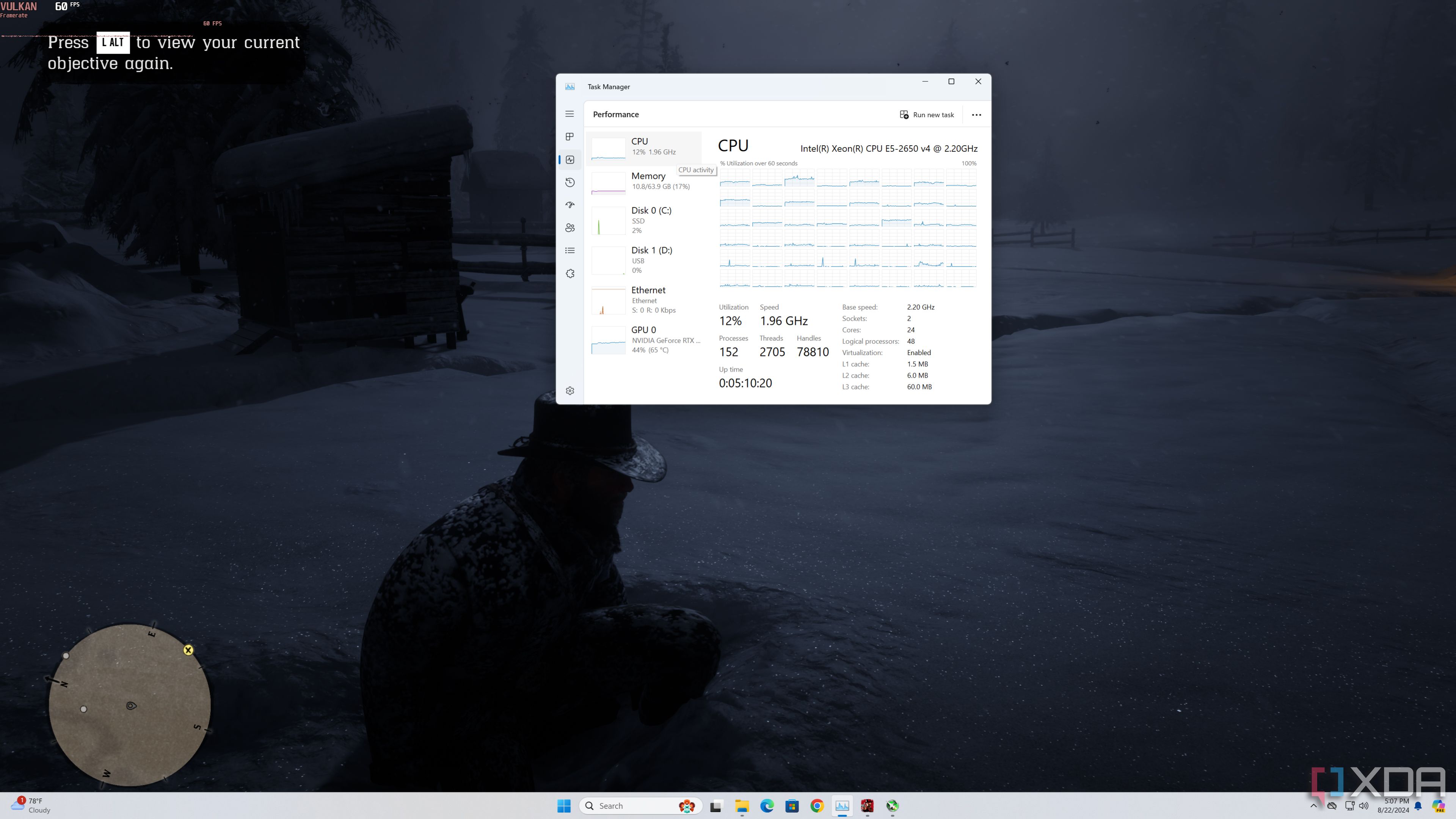

The exception was Red Dead Redemption 2, which would keep crashing if I set Volumetric Fog, Screen Space Reflections, and other heavy effects to Ultra on my GTX 1080, so I had to compromise and lower them to Medium/High.

To avoid skewing the results further, I kept DLSS and ray tracing disabled at all times. Finally, instead of relying on each game’s benchmarking tool, I played every title for a few minutes to get a more accurate reading of GPU usage and FPS.

Unsurprisingly, the GTX 1080 struggled at 4K

Although the Xeon processors were the bottleneck at 1080p

For the first round of tests involving the GTX 1080, frame rates were more or less what you’d expect from a server PC using an older GPU. At 4K, the games could hardly be called playable, with GPU usage pinned at 100% in all titles. This meant that the system was being held back by the graphics card. Of course, I could have gotten slightly better frame rates with a faster processor, but a 4-5 FPS difference doesn’t mean much when you’re playing (if you can call it that) at around 20 FPS.

| GTX 1080 + 2x Xeon E5-2650 v4 | Frame rate |
|---|---|
| Red Dead Redemption 2 (4K, custom settings) | 25 FPS |
| Red Dead Redemption 2 (1080p, custom settings) | 38 FPS |
| Cyberpunk 2077 (4K, Ultra settings) | 53 FPS |
| Cyberpunk 2077 (1080p, Ultra settings) | 17 FPS |

However, the situation was very different once I turned the resolution down to 1080p. Except in the more demanding titles, Red Dead Redemption 2 and Cyberpunk 2077, GPU usage dropped below 100%, meaning that the two processors, with 24 cores and 48 threads between them, were now the bottleneck rather than the GPU.

| GTX 1080 + 2x Xeon E5-2650 v4 | Frame rate |
|---|---|
| Armored Core VI: Fires of Rubicon (4K, Ultra settings) | 34 FPS |
| Armored Core VI: Fires of Rubicon (1080p, Ultra settings) | 52 FPS |
| Baldur’s Gate 3 (4K, Ultra settings) | 24 FPS |
| Baldur’s Gate 3 (1080p, Ultra settings) | 57 FPS |
| Elden Ring (4K, Ultra settings) | 33 FPS |
| Elden Ring (1080p, Ultra settings) | 46 FPS |

In Baldur’s Gate 3, Armored Core VI: Fires of Rubicon, and Elden Ring, performance was clearly held back by the server CPUs. I don’t want to complicate things by throwing out more numbers, but let’s just say that Elden Ring consistently hits a solid 60 FPS at 1080p when I pair my GTX 1080 with a Ryzen 5 1600. Meanwhile, Baldur’s Gate 3 and Armored Core VI usually exceed the 60 FPS mark, and depending on the in-game environment, I’ve seen frame rates as high as 80 FPS with the same CPU.
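The rule of thumb used throughout these tests (GPU usage pinned at 100% points to the graphics card, anything well below that points elsewhere, usually the CPU) can be written down as a small sketch. The function name and the 95% cutoff are my own assumptions for illustration, not values taken from any monitoring tool.

```python
def classify_bottleneck(gpu_util_percent: float,
                        gpu_bound_threshold: float = 95.0) -> str:
    """Guess the limiting component from average GPU utilization.

    gpu_bound_threshold is an assumed cutoff chosen for illustration;
    pick whatever margin suits your own monitoring tool.
    """
    if not 0.0 <= gpu_util_percent <= 100.0:
        raise ValueError("GPU utilization must be between 0 and 100")
    if gpu_util_percent >= gpu_bound_threshold:
        return "GPU-bound"
    # The GPU is waiting on something else, most often the CPU.
    return "CPU-bound (or limited elsewhere)"


if __name__ == "__main__":
    print(classify_bottleneck(100.0))  # usage pinned at the limit
    print(classify_bottleneck(80.0))   # hypothetical reading below the limit
```

A single average hides spikes, so treat the result as a hint rather than a verdict.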

With the RTX 3080 Ti, the system was limited only by the CPU

And in almost every game, the FPS was very low at 1080p

After wrapping up the benchmarks on the GTX 1080, I put my RTX 3080 Ti in its place. In Armored Core VI and Elden Ring, GPU utilization never even reached the 70% mark, which means that the RTX 3080 Ti was being held back by the processors.

| RTX 3080 Ti + 2x Xeon E5-2650 v4 | Frame rate |
|---|---|
| Armored Core VI: Fires of Rubicon (4K, Ultra settings) | 54 FPS |
| Armored Core VI: Fires of Rubicon (1080p, Ultra settings) | 65 FPS |
| Elden Ring (4K, Ultra settings) | 37 FPS |
| Elden Ring (1080p, Ultra settings) | 42 FPS |

I used to play both titles at the same resolutions (on Ultra settings) on my main rig, which includes a Ryzen 5 5600X, and as you can imagine, the frame rates were always at or above the 60 FPS mark. Also, lowering the resolution didn’t increase the frame rate much on the Xeon system; all it did was reduce GPU usage.
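To put a number on how little the lower resolution helped, here is a quick calculation using the RTX 3080 Ti results for these two games from the table above. The helper name is my own; the FPS figures are the ones I measured.

```python
def scaling_gain_percent(fps_4k: float, fps_1080p: float) -> float:
    """Percentage FPS increase when dropping from 4K to 1080p."""
    if fps_4k <= 0:
        raise ValueError("fps_4k must be positive")
    return (fps_1080p / fps_4k - 1.0) * 100.0


# 4K renders four times as many pixels as 1080p.
PIXEL_RATIO = (3840 * 2160) / (1920 * 1080)

# (4K FPS, 1080p FPS) on the RTX 3080 Ti + 2x Xeon E5-2650 v4
results = {
    "Armored Core VI: Fires of Rubicon": (54, 65),
    "Elden Ring": (37, 42),
}

for game, (fps_4k, fps_1080p) in results.items():
    gain = scaling_gain_percent(fps_4k, fps_1080p)
    print(f"{game}: {gain:.1f}% more FPS for {PIXEL_RATIO:.0f}x fewer pixels")
# Armored Core VI: Fires of Rubicon: 20.4% more FPS for 4x fewer pixels
# Elden Ring: 13.5% more FPS for 4x fewer pixels
```

A GPU-limited game would scale far more than this when the pixel count drops to a quarter, which is why such small gains point at the CPUs.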

| RTX 3080 Ti + 2x Xeon E5-2650 v4 | Frame rate |
|---|---|
| Cyberpunk 2077 (4K, Ultra settings) | 44 FPS |
| Cyberpunk 2077 (1080p, Ultra settings) | 63 FPS |

Meanwhile, running Cyberpunk 2077 at 4K on Ultra settings really taxed the last-gen card, as GPU usage remained at 100%. Switching to 1080p made a big difference in frame rates, although it’s worth mentioning that even my old Ryzen 5 5600X could easily hit 90+ FPS with the same GPU.

| RTX 3080 Ti + 2x Xeon E5-2650 v4 | Frame rate |
|---|---|
| Baldur’s Gate 3 (4K, Ultra settings) | 78 FPS |
| Baldur’s Gate 3 (1080p, Ultra settings) | 84 FPS |
| Red Dead Redemption 2 (4K, custom settings) | 68 FPS |
| Red Dead Redemption 2 (1080p, custom settings) | 75 FPS |

Now for the more interesting results: Red Dead Redemption 2 and Baldur’s Gate 3 ran at surprisingly high frame rates even at 4K. One could argue that I ran RDR2 with slightly lowered settings, but still, I was very surprised by the FPS, as it was very close to what I usually get on my main rig. But everything made sense once I switched to Task Manager and checked the usage of all cores. From the looks of things, both games did a good job of spreading the load across all cores, resulting in better performance than the other titles I tested.
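If you would rather not eyeball the Task Manager graphs, the same check can be roughed out in a few lines. This is only a sketch: the 50% "busy" cutoff and both sets of per-core readings below are made-up values for illustration, not numbers I recorded.

```python
from statistics import mean


def summarize_core_load(per_core_percent: list[float],
                        busy_threshold: float = 50.0) -> dict:
    """Summarize how evenly a game spreads its load across cores.

    busy_threshold is an assumed cutoff for calling a core "busy".
    """
    if not per_core_percent:
        raise ValueError("need at least one core reading")
    busy = sum(1 for p in per_core_percent if p >= busy_threshold)
    return {
        "average_percent": mean(per_core_percent),
        "peak_percent": max(per_core_percent),
        "busy_cores": busy,
        "busy_share": busy / len(per_core_percent),
    }


# Hypothetical readings for eight threads, not measured data.
well_spread = [62, 58, 65, 60, 55, 61, 59, 63]
single_thread_heavy = [98, 12, 9, 15, 8, 11, 10, 7]

print(summarize_core_load(well_spread))
print(summarize_core_load(single_thread_heavy))
```

A high peak with a low share of busy cores is the pattern that hurts slow-clocked server chips, while an even spread is what lets them keep up.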

Should you buy a Xeon processor for gaming?

Obviously, the answer is a bit more complicated than screaming no and calling it a day. If you are planning to run esports titles, then you should forget about using server CPUs and go for a high-end consumer processor. Similarly, if you prefer high refresh rates over sharp resolutions, you will be very disappointed with the performance of server-grade processors.

On the other hand, if you play at high resolutions, where the GPU is more important than the processor, a cheap Xeon setup might be what you want. As you have seen, there are several games that can use multiple cores to compensate for the lack of single-core performance. Considering how cheaply you can grab some of these processors, the frame rates are respectable if you don’t want to spend more than $500 on the CPU alone.

To conclude, while the overall performance varies depending on the title, you can expect playable frame rates at high resolutions once you combine these CPUs with powerful graphics cards. Keep an eye on the system’s power draw if you don’t want to be blindsided by obnoxiously high electricity bills.
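For a rough idea of what that power draw costs, the arithmetic is simple enough to sketch. Every input below (average wattage, hours played, and price per kWh) is a placeholder I made up for the example, so swap in a reading from a wall meter and the rate on your own bill.

```python
def monthly_energy_cost(avg_watts: float, hours_per_day: float,
                        price_per_kwh: float, days: int = 30) -> float:
    """Estimate the monthly electricity cost of running a system."""
    if min(avg_watts, hours_per_day, price_per_kwh) < 0 or days < 0:
        raise ValueError("inputs must be non-negative")
    kwh = avg_watts / 1000.0 * hours_per_day * days
    return kwh * price_per_kwh


# Hypothetical inputs: 400 W average draw, 4 hours a day, $0.15 per kWh.
cost = monthly_energy_cost(avg_watts=400, hours_per_day=4, price_per_kwh=0.15)
print(f"Estimated cost: ${cost:.2f} per month")
```

A home lab machine that stays on around the clock changes the picture quickly, since the hours term grows sixfold going from 4 to 24.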
